Hello everyone,

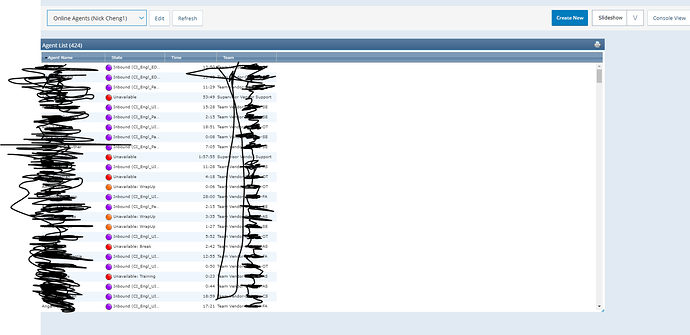

I am trying to extract data from a dynamic table that refreshes every five seconds. I used an XPath locator to get the text and save it to a CSV file, but this only gives me some of the records, not all of them. I want to extract the whole table at once. I have attached a screenshot of the table (above) and my macro (below). I would really appreciate any feedback and suggestions.

```json
{
  "Command": "select",
  "Target": "id=dashboardUserViewList",
  "Value": "label=Online Agents (Nick1)",
  "Targets": [
    "id=dashboardUserViewList",
    "xpath=//*[@id=\"dashboardUserViewList\"]",
    "xpath=//select[@id='dashboardUserViewList']",
    "xpath=//select",
    "css=#dashboardUserViewList"
  ]
},
{
  "Command": "click",
  "Target": "id=dashboardUserViewList",
  "Value": "",
  "Targets": [
    "id=dashboardUserViewList",
    "xpath=//*[@id=\"dashboardUserViewList\"]",
    "xpath=//select[@id='dashboardUserViewList']",
    "xpath=//select",
    "css=#dashboardUserViewList"
  ]
},
{
  "Command": "pause",
  "Target": "5000",
  "Value": ""
},
{
  "Command": "comment",
  "Target": "store // !replayspeed",
  "Value": "SLOW"
},
{
  "Command": "store",
  "Target": "${!runtime}",
  "Value": "start"
},
{
  "Command": "pause",
  "Target": "3000",
  "Value": "start"
},
{
  "Command": "storeText",
  "Target": "/html/body/div[2]/form/div[5]/div[2]/div/div[5]/div",
  "Value": "data"
},
{
  "Command": "comment",
  "Target": "pause // 3000",
  "Value": ""
},
{
  "Command": "store",
  "Target": "${!runtime}",
  "Value": "end"
},
{
  "Command": "store",
  "Target": "${end} - ${start}",
  "Value": "elapsed"
},
{
  "Command": "echo",
  "Target": "${elapsed}",
  "Value": ""
},
{
  "Command": "store",
  "Target": "FAST",
  "Value": "!replayspeed"
},
{
  "Command": "store",
  "Target": "${data}",
  "Value": "!csvLine"
},
{
  "Command": "csvSave",
  "Target": "data.csv",
  "Value": ""
},
{
  "Command": "echo",
  "Target": "${data}",
  "Value": ""
}
```
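One possible cause of the partial result, offered as a guess rather than a confirmed diagnosis: the XPath in the `storeText` step ends in `/div` with no index, so if it matches several row elements, `storeText` only returns the text of the first match. A minimal sketch of an alternative, assuming (not verified against the page) that each child `div` of that container is one record, is to count the matches with `storeXpathCount` and read them one at a time inside a `times` loop, writing one CSV line per record. The variable names `rowCount` and `row` are hypothetical, and the XPath is copied from the macro above.

```json
{
  "Command": "comment",
  "Target": "Hypothetical: assumes each child div of div[5] is one table record",
  "Value": ""
},
{
  "Command": "storeXpathCount",
  "Target": "/html/body/div[2]/form/div[5]/div[2]/div/div[5]/div",
  "Value": "rowCount"
},
{
  "Command": "times",
  "Target": "${rowCount}",
  "Value": ""
},
{
  "Command": "storeText",
  "Target": "xpath=(/html/body/div[2]/form/div[5]/div[2]/div/div[5]/div)[${!times}]",
  "Value": "row"
},
{
  "Command": "store",
  "Target": "${row}",
  "Value": "!csvLine"
},
{
  "Command": "csvSave",
  "Target": "data.csv",
  "Value": ""
},
{
  "Command": "end",
  "Target": "",
  "Value": ""
}
```

Because the table refreshes every five seconds, the number of rows can change while the loop is running, so it may help to start the loop right after a refresh. As far as I know, `csvSave` appends the current `!csvLine` to the file and then clears it, which is why the save sits inside the loop.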