Our existing local OCRExtractRelative, OCRExtractByTextRelative, OCRExtractScreenshot and OCRSearch can help with most of these questions. But as you said, making a macro run reliably on such a complex screen will take some time with testing and fine-tuning.

So if sending a screenshot to a reliable third-party cloud service like Anthropic is acceptable, the aiPrompt command makes the automation a breeze:

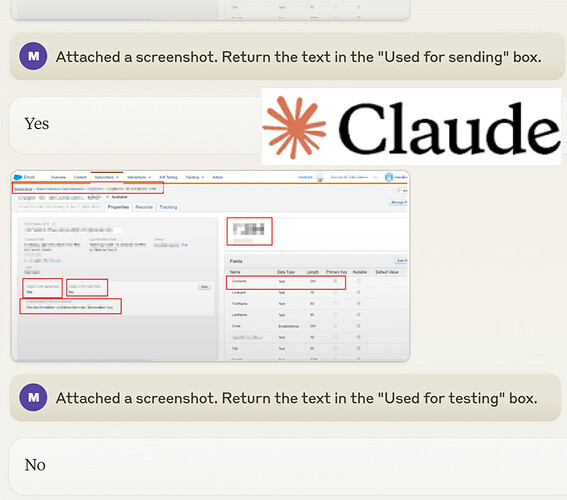

I blurred most of the data and then tested your screenshot with the aiPrompt command:

aiPrompt | sfmc.png#Attached a UI. Return the name of the primary ID, see the table on the right. | s

Answer: Based on the table shown in the right panel, the primary ID field is ContactId.

aiPrompt | sfmc.png#Attached a screenshot. Return the text in the "Used for sending" box. | s

Answer: Yes

aiPrompt | sfmc.png#Attached a screenshot. Return the text in the "Used for testing" box. | s

Answer: No

Note1: sfmc.png (the file name before the # in the commands above) is the screenshot from the forum post above. I imported it into the Visual tab and then ran the commands on it. During an automation you would most likely take the screenshot on the fly with captureScreenshot | sfmc.png, or re-use the __last_screenshot.png image. The file __last_screenshot.png is created by all visual commands, e.g. XClick, XClickText, visualAssert, and so on. Therefore, if you run a visual command before the aiPrompt command, there is no need to retake the screenshot as long as the screen did not change.
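
As a rough sketch of the on-the-fly variant described in Note1, the steps could look like this in the same Command | Target | Value notation used above. The echo step and the idea of printing ${s} afterwards are only my illustration, and I am assuming the answer is stored in the variable named in the third column (s), as in the tests above:

```
captureScreenshot | sfmc.png |
aiPrompt | sfmc.png#Attached a screenshot. Return the text in the "Used for sending" box. | s
echo | ${s} |
```

If a visual command such as XClick already ran on the unchanged screen, the first line could probably be dropped and __last_screenshot.png used in place of sfmc.png in the aiPrompt line.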

Note2: You can use the regular Claude.ai chat (Claude 3.5 Sonnet engine) to test your prompts for use with the aiPrompt command: